2024 trends in speech recognition

2023 was undoubtedly the year generative AI (artificial intelligence) broke into the mainstream. The launch of ChatGPT at the end of 2022 kicked off a rush to find the limits of what new AI systems could do. Making images, writing code, creating poetry – the possibilities just kept growing. As 2024 begins, AI isn’t going anywhere. But the focus is now shifting from text and images to speech recognition.

Predicting where technology will go next is difficult, but predicting future AI trends is even trickier. The large language models (LLMs) that underpin generative AI are improving all the time, so something that was difficult for an AI to do last week could be trivially easy next week. So rather than making long-range predictions, we're focusing on what's on the horizon for the next 12 months.

AI assistants that are available now can create and transform text and images, all based on a simple prompt. But that familiar chat box interface may soon become a thing of the past as AI assistants learn to listen and speak as well as read and write.

AI speech recognition is set to drive major trends in the technology industry in the near future. So what exactly is it?

# What is speech recognition?

Speech recognition is the ability of a piece of software to turn human speech into text. When you ask Siri a question, or dictate a voice note into your iPhone, your device is using speech recognition to parse what you say. It's important to note, however, that speech recognition is different from voice recognition and from text to speech technology. See the table below for definitions of each.

| Term | Definition |
|---|---|
| Speech recognition | A feature that lets an app turn spoken words into text, and take actions based on that text. Also known as speech to text. |
| Voice recognition | A security feature used to automatically identify an individual based on the sound of their voice. |
| Text to speech | A feature allowing software to read written text aloud. |

One of the earliest examples of speech recognition is the IBM Shoebox computer from 1962, which could recognize 16 different English words. Back then, the distinctive sound of each word had to be encoded for the computer to be able to recognize it. AI assistants like Alexa and Siri are much smarter than that, but you've probably noticed they still have a fairly limited vocabulary: ask a question using a less common word and they'll likely be confused.

In contrast, studies have shown that, even without being trained on the sounds of a specific language, modern LLMs are very accurate at speech recognition. Pairing these powerful speech recognition capabilities with an existing voice-based AI assistant would make for a much more capable app.

While speech recognition (speech to text) is a major AI trend, LLMs have also improved the opposite process, text to speech, which shows great potential for future innovation.

# Speech to text vs text to speech

Speech to text capability allows you to interact with an AI assistant with your voice, whereas text to speech allows the AI to talk back. Text to speech software has been a feature of many operating systems since the early 1990s, with Microsoft Sam from Windows 2000 as a typical example.

Text to speech functionality has mostly been associated with automated screen readers and other assistive technology for people with visual impairments. Although text to speech voices have improved over time, their monotonous delivery and sometimes awkward pronunciation mean they don't sound like natural human speech.

However, AI assistants, including ChatGPT, have been adding text to speech functionality with a much more natural cadence and speaking style. This sudden improvement in the quality of text to speech is another force shaping the future of AI, along with speech recognition.

# Global speech recognition market size

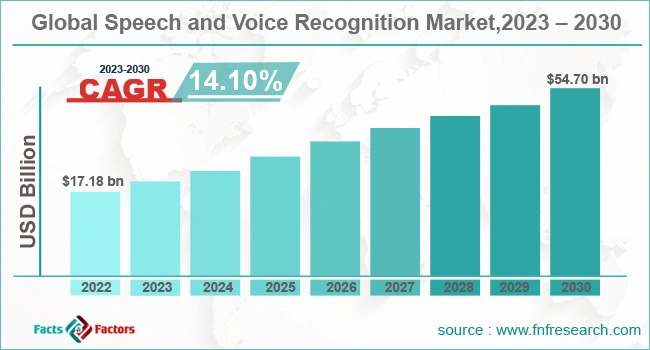

With all these advances in AI's ability to understand natural spoken language, the market for speech recognition systems is heating up. Facts and Factors Research estimates that the global voice and speech recognition market will grow from $17.18 billion in 2022 to $54.70 billion by 2030, a compound annual growth rate (CAGR) of 14.1%.
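Growth figures like these can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The short Python sketch below applies it to the two market sizes quoted above; it is an illustration of the formula, not the research firm's methodology. Taken over the eight years from 2022 to 2030, these two endpoints imply a rate of roughly 15.6%, a little above the quoted 14.1%, which suggests the report measures its CAGR over a different window or from a different base year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted above, in billions of US dollars.
# The 2022-2030 window is our assumption about which endpoints to compare.
rate = cagr(17.18, 54.70, years=2030 - 2022)
print(f"Implied CAGR, 2022-2030: {rate:.1%}")
```

The same function works for any pair of endpoints, so it is easy to check how sensitive the headline rate is to the choice of base year.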

According to Facts and Factors Research, AI-driven speech recognition is behind much of that rapid growth:

“The emergence of artificial intelligence and the growing demand for digitization will open new growth opportunities for the global speech and voice recognition market…The artificial intelligence-based technology segment…is anticipated to dominate the industry expansion.”

So, how exactly will AI assistants drive all that growth? Over the next 12 months, these are the four big AI-driven speech recognition trends to watch out for.

# 4 key trends in speech recognition for 2024

# 1. Say goodbye to the AI chat box

Typing prompts into a chat-style text box has been the standard way to interact with AI since ChatGPT launched. But typing your commands out takes time. Newer AI tools are already offering alternative interfaces, including prewritten prompts and toolbars for fine-tuning the AI’s response.

With huge improvements in speech to text functionality, voice input is the next step for AI interaction. The chat box won't disappear; it just won't be the default, much as graphical user interfaces took over from the command line as the default way to use modern operating systems.

# 2. AI voice assistants get more capable

LLMs are being integrated into all the major voice assistants, including Alexa, Google Assistant, and Siri, to help them understand a wider, more natural range of spoken commands. Microsoft is going a step further, completely replacing its legacy assistant, Cortana, with a new GPT-powered app called Microsoft Copilot, which can automate tasks for you within Windows.

You'll soon be able to ask the assistant on your phone, in your car, or in your smart speaker to handle many more tasks. And thanks to improved text to speech capabilities, your voice assistant should be able to answer you more naturally, too.

# 3. Platforms will get more accessible with AI

One of the major advantages of speech recognition is how it can boost accessibility. Better speech to text functionality means that automatically generated captions on video and audio content can be much more accurate. Auto captions on major social media apps, including YouTube, TikTok and Instagram, have been available for some time, but open source LLMs are rapidly democratizing this feature, making it available to smaller platforms as well.
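To make the captioning point concrete: open source speech recognition models such as OpenAI's Whisper (our example choice, not a tool this article names) can return a transcript as a list of timed segments, and turning those into a standard SubRip (.srt) caption file takes only a few lines. The Python sketch below assumes each segment is a dict with `start` and `end` times in seconds and a `text` field, which is the shape Whisper's `transcribe()` result uses under its `"segments"` key. The helper names `format_timestamp` and `segments_to_srt` are hypothetical.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments) -> str:
    """Turn timed transcript segments into the text of an SRT caption file.

    Assumption: each segment is a dict with 'start' and 'end' (seconds)
    and 'text' keys.
    """
    blocks = []
    for index, seg in enumerate(segments, start=1):
        # Each SRT cue: sequence number, time range, caption text.
        blocks.append(
            f"{index}\n"
            f"{format_timestamp(seg['start'])} --> {format_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    # A blank line separates consecutive cues.
    return "\n".join(blocks)
```

With the `openai-whisper` package installed, usage might look like `model = whisper.load_model("base")`, then `result = model.transcribe("talk.mp3")`, then `segments_to_srt(result["segments"])`, where `talk.mp3` is a placeholder file name. Keeping the formatting step separate from the model call means the same helper works with any recognizer that produces timed segments.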

The move away from the chat box, as discussed above, will let people who have difficulty using a keyboard and mouse rely on speech recognition instead. Improved speech recognition is also being built into operating systems, making them more accessible too.

# 4. AI assistants become true teammates

2024 is the year AI becomes the collaborative work colleague you didn’t know you needed. You can already get AI-generated meeting transcripts in platforms like Zoom, but AI is poised to go well beyond that. Using speech to text, AI assistants will be able to attend meetings on your behalf, take notes, and summarize the key points for you.

Google is already offering an early version of Duet AI, which can keep track of conversations in meetings, note down key action items, and even catch you up on what you missed if you joined late. That’s just the beginning – expect to see more AI assistants turning up in your meetings in 2024.

# Add leading-edge AI features to your app now

All these future advances in AI have one important thing in common: deeply integrating AI into existing platforms. That’s because to fully realize the benefits of future AI trends, AI can’t remain just a standalone tool – it needs to become an integral part of all the apps you use at a foundational level.

One of the most fundamental building blocks of your content creation workflow is your rich text editor. The WYSIWYG editor is a key component of essential platforms, from your CMS to your customer relationship management (CRM) software and your instant messenger. Integrating an AI assistant into your rich text editor brings the benefits of generative AI into your apps at the point where they're most needed.

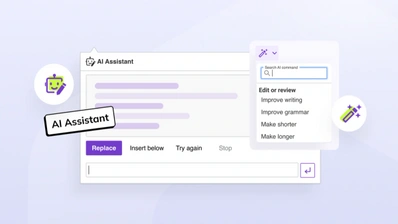

CKEditor’s AI Assistant feature works with market-leading generative AI models, including GPT-4 from OpenAI and Microsoft’s Azure AI. Use saved prompts and quick commands to summarize, translate, and rewrite existing text. Or use the familiar prompt window to get the AI to handle more complex tasks. As AI keeps improving over the next year, you can expect the AI Assistant to become more capable, too.

To access the AI Assistant feature, you need a CKEditor Commercial License. The license lets you pick and choose from our full range of Premium plugins, and it removes GPL license restrictions, so you can use CKEditor in any commercial project.

Contact us today to bring the future of AI into your app.